Migrating Oracle Database to SQL Server: Lessons from the Trenches

Do you need to migrate an Oracle database to SQL Server? Or maybe you are facing that prospect? Recently we had to migrate an Oracle database to SQL Server and move it to the Microsoft Azure Cloud and, having been through the whole process from start to finish, we have learnt some very good and useful lessons. In this article I would like to share some of the more important points about the whole process. I hope you will find it useful.

Why?

Before we get into the details, it is important to answer the question why. Why migrate anyway? What is wrong with leaving it as is on Oracle? There could be many good answers to this question. It cold be that you want to bring some uniformity to the kinds of technology you are using in your company and Oracle falls outside that. It could be cost, because Oracle is significantly more expensive that SQL Server. It may be compatibility issues between systems, even skill within the team is stronger on the SQL Server side than Oracle. Whatever the reason why you want to migrate, I am sure you will find this article useful.

Data Types

One of the major potential headaches that you will have, are the data types. When you migrate between the two worlds, the data types do not exactly match, so you have to use whichever is the nearest or best suited. Now if you think about it, NUMBER(1), NUMBER(9) and NUMBER(10) would all end up as different types in code. Using C#, you have short, int and long there. When you have someone, just add new fields in a hurry with data type NUMBER (because that will do), then when you come to migrate the database, the mist-matches will be painful to unpick. Especially, when those fields are also used as keys for referential integrity. Those relationships will fail during the automatic schema migration. The lesson here, is to be very strict on data types and keep them 100% consistent between all tables and references. The suggestion here is to start tidying up the data types in your Oracle database before you start migration. It will make it so much easier.

Tools

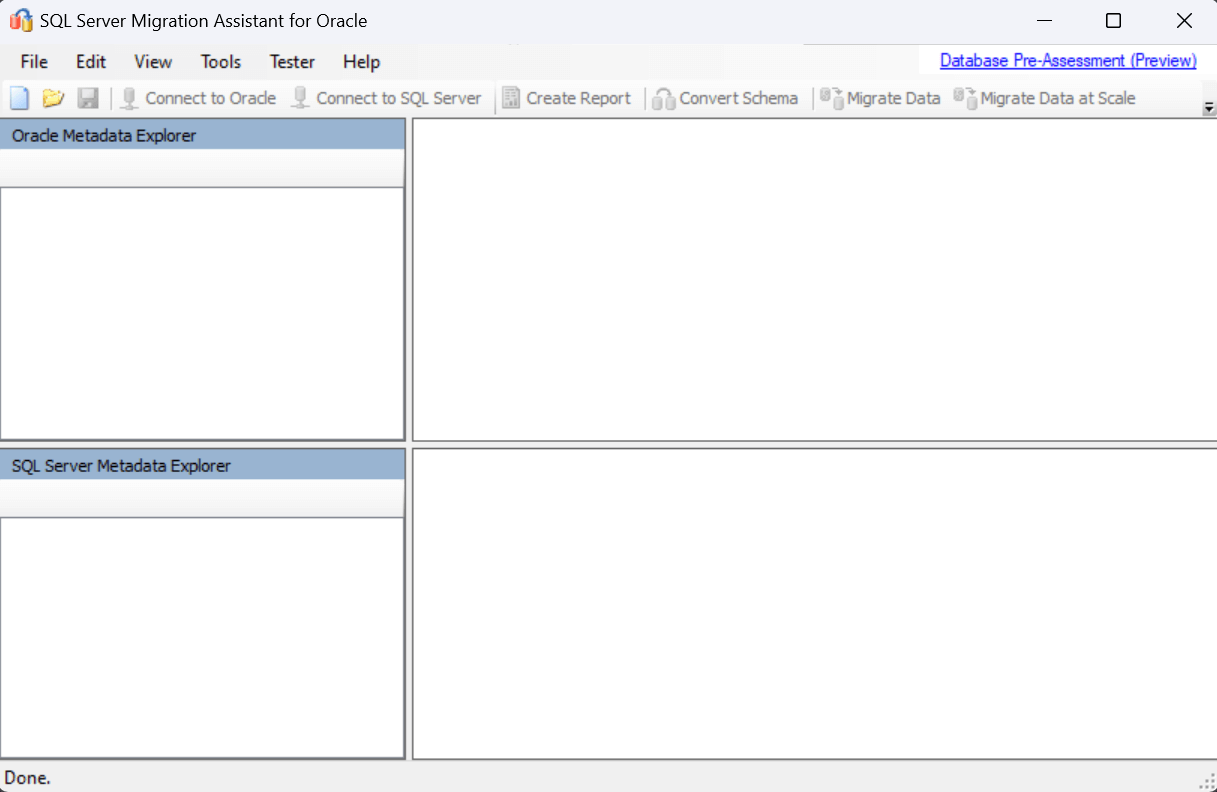

Don’t be afraid to use tools. We used the Microsoft SQL Server Migration Assistant for Oracle. There are plenty of tutorials online about how to use it. It made the whole task so much simpler. It also gives you a list of errors which you can then fix manually after the automatic migration. Make sure you understand every error message and what it is highlighting and then make a decision how you will resolve it, whether you go back to the Oracle database and make some changes there so the migration assistant will no longer raise the error, or whether you will fix it in the SQL Server after the migration. Either way, make sure it is on your action list (migration script) to make sure it does not get missed.

If you have some triggers in your Oracle database, that will cause SQL Server Migration Assistant to add an extra column against the table(s) that have triggers. Consider if you really need triggers and if you do, then be aware of the extra column that it adds to the table.

The Database Size

The Oracle database will probably be much smaller in size. Once you get it across to SQL Server it is going to be much bigger, if not two to three times bigger. So, if you are doing the migration on a different machine than the target environment, then remember, you will need to copy it across to the target environment and depending on the size of the database, it may take a while. If you have to (and probably you will have to) have the system down during this time, then you better have a good network connection. I would recommend doing the migration tasks on a machine near the target machine, so if you want to ultimately host it in the cloud, or a VM in the cloud, do the migrations tasks on a VM on the same Sub-Net so that you can get the migrated DB across nice and quick. It will reduce down-time. Things like shrinking the database and zipping it, will make the .Bak file smaller and quicker to copy across.

Load Testing

SQL Server Databases have a reputation of being slower than Oracle. The reputation is not altogether fair, because a very well optimised and finetuned SQL Server Database can really perform at similar levels as Oracle. But still, it is important to do some load-testing before going live, because you will find if it can handle the same load or not. Without that, you could end-up with loads of deadlocks and complaints about slowness on the first day after going live. Just simulate in code loads of requests and you will find out if it is a problem but concentrate on functionality that is most used so to avoid interruption in business.

Isolation Level

SQL Server Databases of late provide the option of choosing the Isolation Level (IL). Read Committed is the default IL, but it is worth looking at the Snapshot IL. If it is a good fit for you, then turning it on at the beginning is the best option rather than later. It does however require some changes on the code side from the software developers to ensure there is adequate retry logic in the code. It will give you great performance because of the low locking, but it will increase the likelihood of SQL Exceptions which requires retries. It will also increase the Temp DB a bit because it uses it for the row versioning register. If your database does more reading than writing, then this is a definite option to consider.

Deadlocks

We found that the deadlocks increased dramatically after the migration. The first day we had loads of them, so we had to get some indexes created, which helped a lot. Also, we were using Dapper ORM for this particular application and with Dapper, if you do not define the string parameters properly, then it kills the performance. If you are passing a string to the server as a parameter in Dapper, then tell Dapper if it is ANSI, or if it is fixed length, etc. It will help a great deal and increase the performance. In the end using a package like Polly does help since theoretically, there is no way really to completely remove all potential for deadlocks.

Indexes

Indexes saved us from the many deadlocks, but be careful with indexes, because they take space. Sometimes, it is better to change the SQL statement than just add another index. Can the SQL be optimised, changed, or can you change the structure of the table. Don’t go for the index every time. But it is safe to say, a good index will really speed things up and the performance increase will be noticeable. Using the SQL Server Management Studio (SSMS) to manually run the SQL Statement and see if the SSMS gives you a pointer about the need for an Index. We used those suggestions and created indexes and it did improve things.

Dry runs

Do as many dry runs as you can. It will really give you confidence. Also, time the dry runs, it will help you inform the stakeholders how long it should take on the night (notice I said ‘night’) and then use that as a benchmark to make a decision for how much down-time you can handle. By doing the dry runs and documenting very well each step will result in a comprehensive script of steps for the real m2igration run. We learnt from every dry-run we did, so there really isn’t “too many dry runs”. And do them on the target (or similar to the target) platform / environment.

Side Idea

If you use a queue between the app and the database, then the app may remain responsive to some extent during the downtime since all requests will be successfully placed on a queue and then when the Database is back online, all the messages will be inserted in a burst. This is a side-idea and may not be useful to you, but it is worth considering, especially if your application is mainly write, like a bookings app. You can gather up all requests and then process them when the system is back online, with no downtime at all.

Conclusion

Database migrations can be a daunting task, especially when it is between vendors and technologies. But there are adequate tools to aid you in your migration task. Prepare well, do plenty of dry-runs, have a solid checklist for the live run and have a back-up plan. Take plenty of good backups of databases and communicate well with the stakeholders so everybody knows what is happening. Don’t be tempted to do too many changes in one go. Leave as much as you can for after the migration so that to make the initial; jump easier to manage and more manageable. After the migration you can do schema changes in small steps. Have fun!